This post sums up some of my work on MySQL resilience and high availability at Booking.com by presenting the current state of automated master and intermediate master recoveries via Pseudo-GTID & Orchestrator.

Booking.com uses many different MySQL topologies, of varying vendors, configurations and workloads: Oracle MySQL, MariaDB, statement based replication, row based replication, hybrid, OLTP, OLAP, GTID (few), no GTID (most), Binlog Servers, filters, hybrid of all the above.

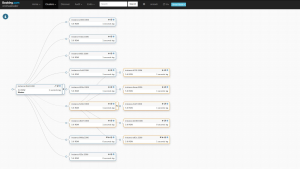

Topologies size varies from a single server to many-many-many. Our typical topology has a master in one datacenter, a bunch of slaves in same DC, a slave in another DC acting as an intermediate master to further bunch of slaves in the other DC. Something like this, give or take:

However as we are building our third data center (with MySQL deployments mostly completed) the graph turns more complex.

Two high availability questions are:

- What happens when an intermediate master dies? What of all its slaves?

- What happens when the master dies? What of the entire topology?

This is not a technical drill down into the solution, but rather on overview of the state. For more, please refer to recent presentations in September and April.

At this time we have:

- Pseudo-GTID deployed on all chains

- Injected every 5 seconds

- Using the monotonically ascending variation

- Pseudo-GTID based automated failover for intermediate masters on all chains

- Pseudo-GTID based automated failover for masters on roughly 30% of the chains.

- The rest of 70% of chains are set for manual failover using Pseudo-GTID.

Pseudo-GTID is in particular used for:

- Salvaging slaves of a dead intermediate master

- Correctly grouping and connecting slaves of a dead master

- Routine refactoring of topologies. This includes:

- Manual repointing of slaves for various operations (e.g. offloading slaves from a busy box)

- Automated refactoring (for example, used by our automated upgrading script, which consults with orchestrator, upgrades, shuffles slaves around, updates intermediate master, suffles back…)

- (In the works), failing over binlog reader apps that audit our binary logs.

Furthermore, Booking.com is also working on Binlog Servers:

- These take production traffic and offload masters and intermediate masters

- Often co-serve slaves using round-robin VIP, such that failure of one Binlog Server makes for simple slave replication self-recovery.

- Are interleaved alongside standard replication

- At this time we have no “pure” Binlog Server topology in production; we always have normal intermediate masters and slaves

- This hybrid state makes for greater complexity:

- Binlog Servers are not designed to participate in a game of changing masters/intermediate master, unless successors come from their own sub-topology, which is not the case today.

- For example, a Binlog Server that replicates directly from the master, cannot be repointed to just any new master.

- But can still hold valuable binary log entries that other slaves may not.

- Are not actual MySQL servers, therefore of course cannot be promoted as masters

- Binlog Servers are not designed to participate in a game of changing masters/intermediate master, unless successors come from their own sub-topology, which is not the case today.

Orchestrator & Pseudo-GTID makes this hybrid topology still resilient:

- Orchestrator understands the limitations on the hybrid topology and can salvage slaves of 1st tier Binlog Servers via Pseudo-GTID

- In the case where the Binlog Servers were the most up to date slaves of a failed master, orchestrator knows to first move potential candidates under the Binlog Server and then extract them out again.

- At this time Binlog Servers are still unstable. Pseudo-GTID allows us to comfortably test them on a large setup with reduced fear of losing slaves.

Otherwise orchestrator already understands pure Binlog Server topologies and can do master promotion. When pure binlog servers topologies will be in production orchestrator will be there to watch over.

Summary

To date, Pseudo-GTID has high scores in automated failovers of our topologies; orchestrator’s holistic approach makes for reliable diagnostics; together they reduce our dependency on specific servers & hardware, physical location, latency implied by SAN devices.