This post is mostly to reassure: now that I have moved to GitHub, orchestrator development continues.

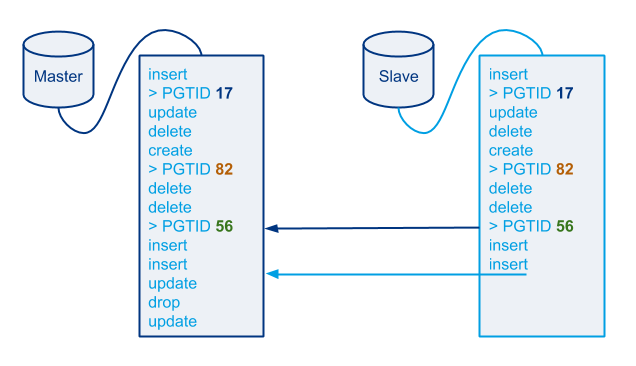

I will have the privilege of working on this open source solution at GitHub. There are a few directions in which we can take orchestrator, and we will be looking into the possibilities. We will continue to strengthen the crash recovery process, and in fact I’ve got a couple of ideas on drastically shortening Pseudo-GTID recovery time, as well as on addressing other debts. We will look into yet other directions, which we will share. My new and distinguished team will work on and with orchestrator and will no doubt provide useful and actionable input.

Orchestrator continues to be open for pull requests, with a temporary latency in response time (it’s the Holidays, mostly).

Some Go(lang) limitations (namely the import path; I’ll blog more about it) will most probably require some changes to the code, which will be well communicated to existing collaborators.

Most of all, we will keep orchestrator a generic solution, while keeping focus on what we think is most important, and there’s some interesting vision here. Time will tell as we make progress.